Projecting into a GAN means searching for the representation of an image in that model. If you look hard enough, you can find close matches for almost any image, even ones that bear little resemblance to what the model was trained on, but my favorite results come when you search just a little bit. This feels a lot more like asking the model, “What would Bill Hader look like as a cat?”

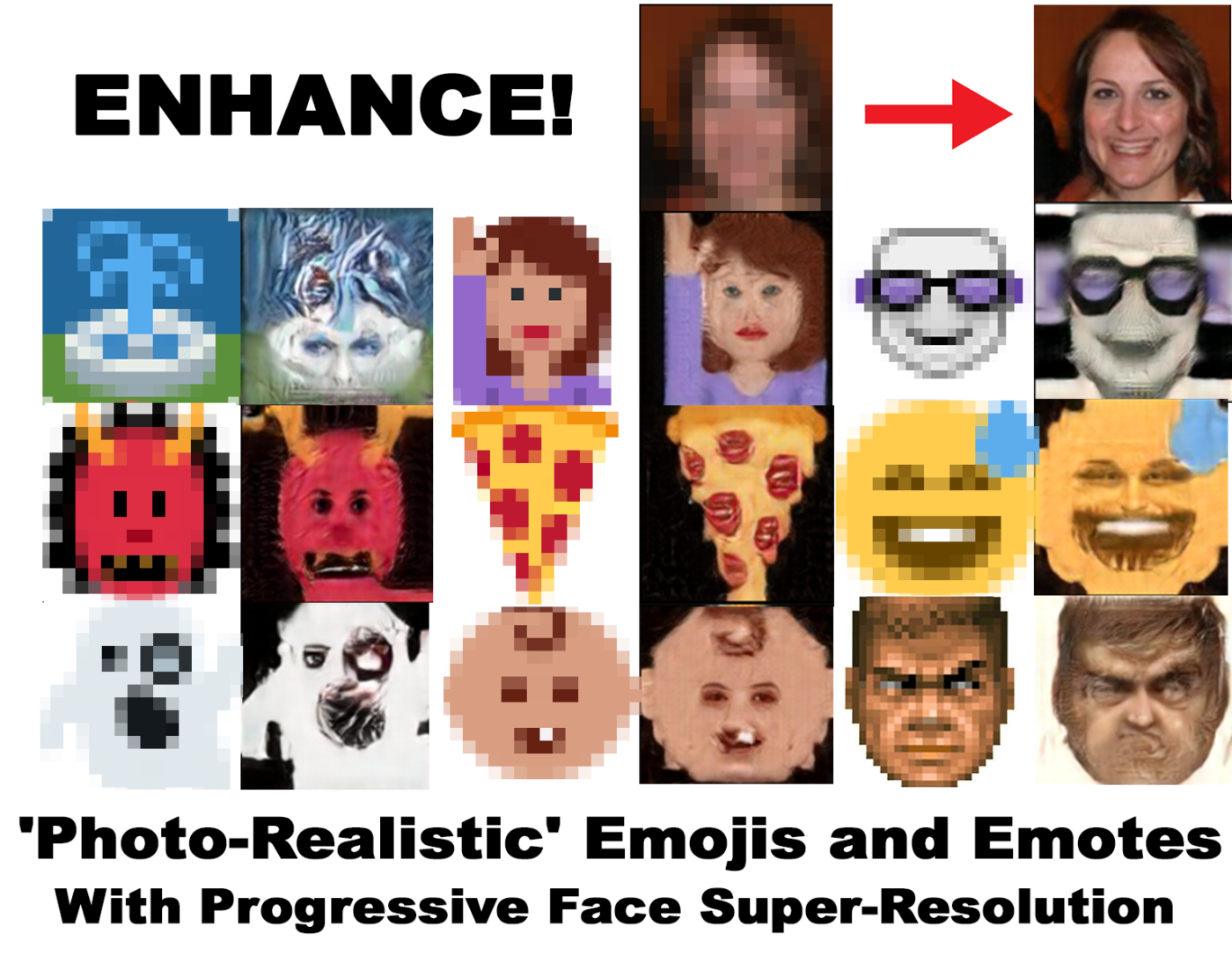
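
For readers curious what "searching" means mechanically, here is a minimal sketch. It assumes projection is done by gradient descent on a latent vector `z`, minimizing the pixel distance between the generator's output and a target image. The two-dimensional latent and the linear "generator" matrix `W` are toy assumptions; a real GAN generator is a deep nonlinear network, and practical projectors often use a perceptual loss rather than raw pixels.

```python
import random

# Toy stand-in for a GAN generator (an assumption for illustration):
# a fixed linear map from a 2-D latent vector to a 3-pixel "image".
W = [[1.0, 0.5],
     [0.0, 1.0],
     [-1.0, 2.0]]


def generate(z):
    """Map a latent vector z to an 'image' (a list of pixel values)."""
    return [sum(w_ij * z_j for w_ij, z_j in zip(row, z)) for row in W]


def loss(z, target):
    """Squared pixel distance between generate(z) and the target image."""
    return sum((g - t) ** 2 for g, t in zip(generate(z), target))


def project(target, steps, lr=0.05, seed=0):
    """Search latent space by gradient descent on the reconstruction loss.

    Few steps = "search just a little bit"; many steps = a close match.
    """
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(len(W[0]))]  # random starting point
    for _ in range(steps):
        residual = [g - t for g, t in zip(generate(z), target)]
        # Gradient of sum(residual^2) with respect to z is 2 * W^T * residual.
        grad = [2 * sum(W[i][j] * residual[i] for i in range(len(W)))
                for j in range(len(z))]
        z = [z_j - lr * g_j for z_j, g_j in zip(z, grad)]
    return z
```

The `steps` argument is the knob: with only a few steps, `z` stays near its random starting point, so the output still looks like something the model would naturally produce; with many steps, the output is pulled toward the closest match the search can find, even for a target far from the training data.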

ENHANCE! ‘Photo-Realistic’ Emojis and Emotes With Progressive Face Super-Resolution

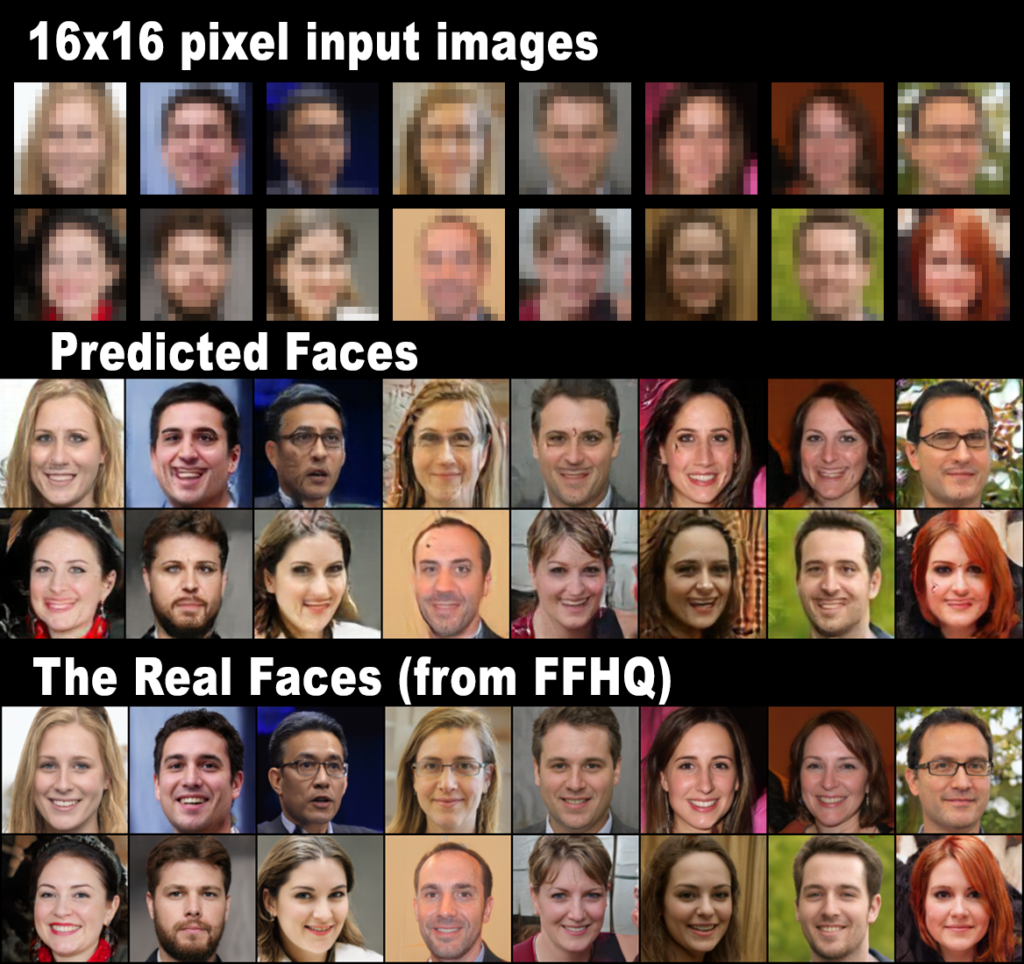

Progressive Face Super-Resolution via Attention to Facial Landmark (arxiv.org) is a machine learning model trained to reconstruct face images from tiny 16×16 pixel inputs, scaling them up to 128×128 with nearly photo-realistic results. Here’s an example:

A detail not mentioned in the official paper: a pretty high percentage of Harry-Potter-style scars…🤨

This is a best-case scenario for this model. While I didn’t cherry-pick these samples, these faces are cropped to the right size, they are roughly aligned, and they were resized to 16×16 pixel input images with the exact same code that was used to train and test the model. This is what this model was built for.
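
As a rough illustration of that preprocessing step, here is a sketch that shrinks a 128×128 grayscale image to 16×16. Since 128 / 16 = 8, each output pixel stands in for an 8×8 block of 64 input pixels. The box-average filter and the `box_downsample` name are assumptions for illustration; the filter the model's own code uses is not stated here, and a different method (bicubic, for instance) would give slightly different 16×16 inputs, which is why matching the training pipeline matters.

```python
def box_downsample(image, factor):
    """Average each factor x factor block of a 2-D grayscale image.

    image: list of rows (lists of floats); both dimensions must be
    divisible by factor.
    """
    h, w = len(image), len(image[0])
    if h % factor or w % factor:
        raise ValueError("image size must be a multiple of factor")
    out = []
    for by in range(0, h, factor):
        row = []
        for bx in range(0, w, factor):
            block = [image[y][x]
                     for y in range(by, by + factor)
                     for x in range(bx, bx + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# 128 / 16 = 8: each output pixel summarizes an 8x8 block of the input.
FACTOR = 128 // 16
```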

What about what it wasn’t built for?

Testing The Limits of Grover The Neural Fake News Detector. Can It Write Fiction? Can It Write Riddles?

Grover is a neural network modeled after GPT-2 that serves as a state-of-the-art detector of neural fake news. Grover is also a state-of-the-art generator of fake news, and its authors provide a web interface. Since Grover is modeled after the full-size 1.5B GPT-2 – not the smaller version the public has access to – this is a bit like getting a back door to the full-size GPT-2. This is very exciting! (Update: They just reduced the size of the public model to 345M; everything in this post comes from the full-size model. Glad I stayed up way too late hammering the real thing for samples… Update 2: It’s back up!)

Grover was trained on articles from 5,000 news domains indexed by Google News, rather than on the internet in general like GPT-2. As you would expect, Grover excels at writing fake news:

Scientists Recommend Against Vaccination: "The Evidence Is Now Clear: Vaccines Cause Autism and Cancer" After a 15-year study, scientists in Britain are recommending that the British government refrain from administering vaccines. The study, written by Mike Gunton, professor of epidemiology and professor of genetic medicine at the University of Liverpool, claims that the chances of the MMR vaccine, a vaccine for measles, mumps and rubella, causing autism in children are “strongly possible.” And while Gunton and his team say their findings only apply to the MMR vaccine, they believe there are “many similar disorders” linked to vaccines and there is “strong evidence” vaccines cause autism and leukemia. Gunton told the Telegraph that no effort should be made to give vaccines to children younger than 3 months of age. The vaccine is highly controversial, and parents have been choosing to opt out of the MMR vaccination in recent years. This year, the British government’s vaccination program chose not to distribute the MMR vaccine, citing the study as the reason.

You don’t have to fish to get coherent fake news out of Grover; it’s absolutely great at it.

What else can we do with Grover?

Grover writes news when you ask it to generate the Article field, but since it will generate any of the fields (title, authors, headline, etc.), you can use Grover in other ways. Ask Grover to write a headline and give it the article text as input, and it works kind of like a one-sentence summarizer (even when given straight-up prose; more on this later…). Or estimate the date of a news story by asking Grover to generate just the date field. Pretty cool.
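
The field trick can be pictured as: pick one field as the target and pass every other known field as context. The sketch below only builds that request; the field names and the `build_request` helper are hypothetical and do not reflect Grover's actual input format or web interface.

```python
# Hypothetical field names, loosely following the fields mentioned above;
# Grover's real input format is not reproduced here.
FIELDS = ("date", "authors", "title", "article")


def build_request(known, target):
    """Return (context, target): all known fields except the one to generate."""
    if target not in FIELDS:
        raise ValueError(f"unknown field: {target!r}")
    context = {k: v for k, v in known.items() if k in FIELDS and k != target}
    return context, target


# One-sentence "summarizer": give the article text, ask for the title.
summary_request = build_request({"article": "Scientists in Britain..."}, "title")

# Date estimator: give title and article, ask only for the date.
date_request = build_request(
    {"title": "Some headline", "article": "Body text...", "date": ""}, "date"
)
```

The design point is that nothing about the request is specific to news articles: whichever field is left out becomes the thing the model writes.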

But now let’s turn the power of this fully operational 1.5 Billion Parameter Neural Network to… other things.

Can Grover write silly fake news?

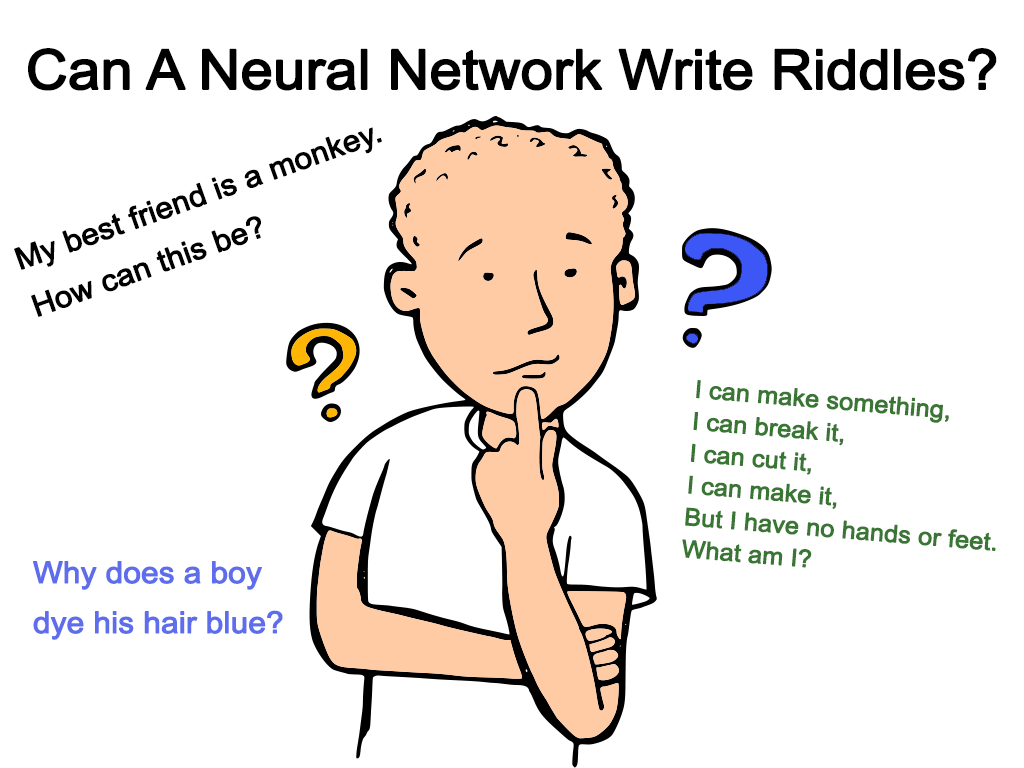

My Best Friend Is A Monkey, How Can This Be? Terrible Neural Network Riddles

Can a neural network write good riddles? Maybe, but I sure didn’t prove it with this post. If you have a taste for the terrible, read on:

The too obvious:

The What Is It Riddle

What’s the first letter of the word ‘E’?

Answer: E.

The Why Does It Look Like A Man Riddle:

Why does it look like a man’s head?

Answer: It might be a man’s head.

The mysterious:

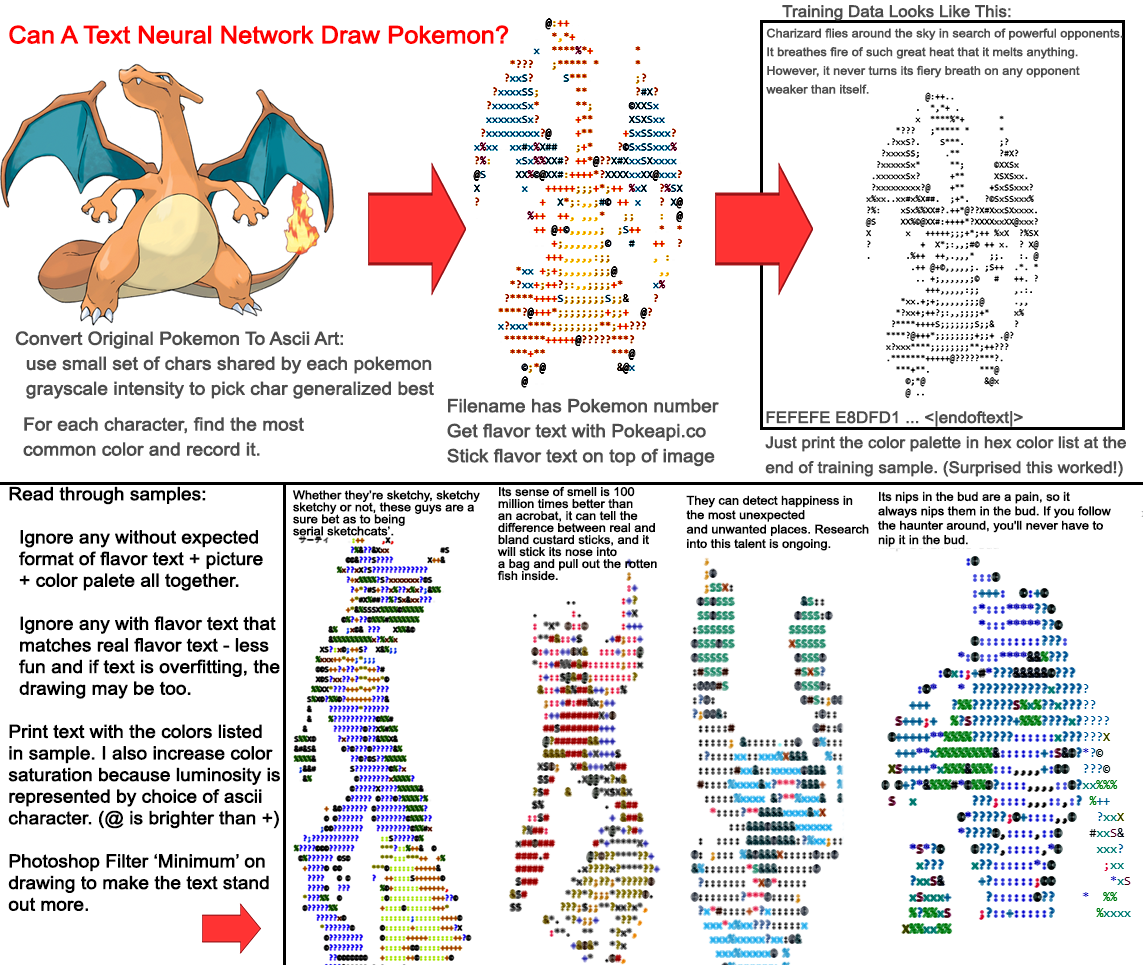

How To Use The Most Advanced Text/Language Model Neural Network In The World To Draw Pokemon.

Placeholder for a later post.

For now, I’m just linking some tweets.

Scenes That Never Happened In The Web Serial WORM

Worm is a web serial written by Wildbow. You can read it on parahumans.wordpress.com. If you have not read Worm, turn away now, because even the silly neural network bits will spoil it for you.

If you insist on reading anyway, for the love of God, at least only look at the first half of the blog post. The second half contains paragraphs of text from Worm itself – a greatest hits of spoilers.

These are scenes generated by the GPT-2 neural network. The first section has unconditional scenes – where the network is just told ‘write something’; the second section has prompted scenes – where the network is given an existing Worm scene and asked to complete it.
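
To make the two modes concrete, here is a toy sketch. It uses a word-bigram table as a stand-in for GPT-2, which is an illustrative assumption: GPT-2 conditions on the whole preceding context, not just the previous word. The tiny corpus and the `train_bigrams` and `sample` names are likewise hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record which word follows which: a toy stand-in for a language model."""
    words = text.split()
    table = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        table[current].append(nxt)
    return table


def sample(table, length, prompt=None, seed=0):
    """Generate up to `length` words.

    prompt=None  -> unconditional: start from a random word ("write something").
    prompt="..." -> prompted: keep the given text and continue from it.
    """
    rng = random.Random(seed)
    if prompt:
        out = prompt.split()
    else:
        out = [rng.choice(sorted(table))]
    while len(out) < length:
        followers = table.get(out[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        out.append(rng.choice(followers))
    return " ".join(out)


corpus = "the cat sat on the mat and the cat ran to the door"
table = train_bigrams(corpus)
print(sample(table, 8))                    # unconditional
print(sample(table, 8, prompt="the cat"))  # prompted
```

The only difference between the two modes is where generation starts: a prompted run is forced to continue the given text, which is why prompted scenes stay much closer to the original story.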

I Forced A Bot To Watch Over 1,000 Hours Of Star Trek Episodes And Then Asked It To Write 1000 Olive Garden Commercials.

I wish I could tell you I had a good reason why.

Anyway, let’s use the GPT-2 345M model to recreate the viral (but not real) “I Forced a Bot” tweets that I named this site after… but with a model trained on Star Trek.

I was going to use many different training materials and cover more of the original viral tweets, but the Star Trek Olive Garden Commercial samples are just killing me by themselves. I honestly think I could do nothing with GPT-2 but generate Olive Garden commercials from different models and never get bored. It deserves its own post!

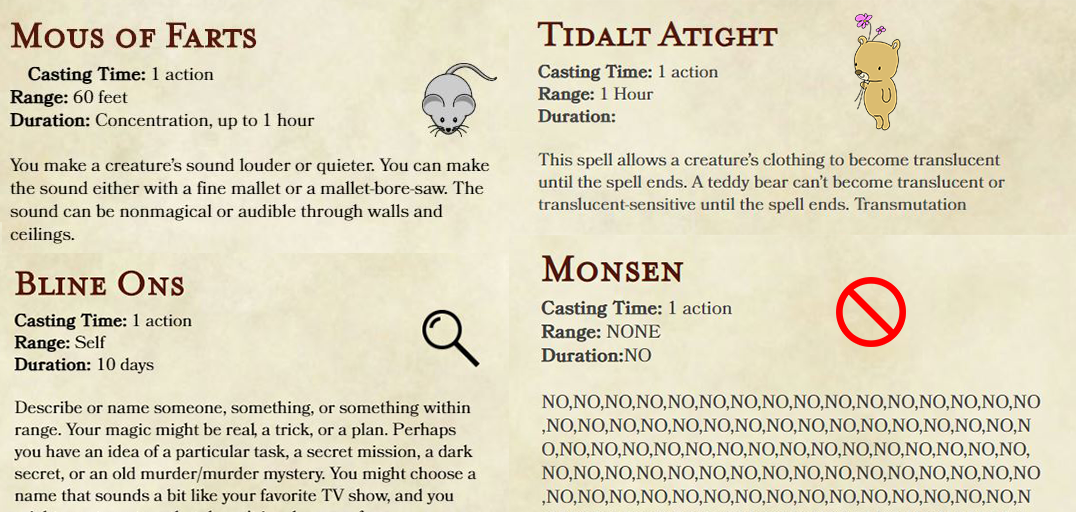

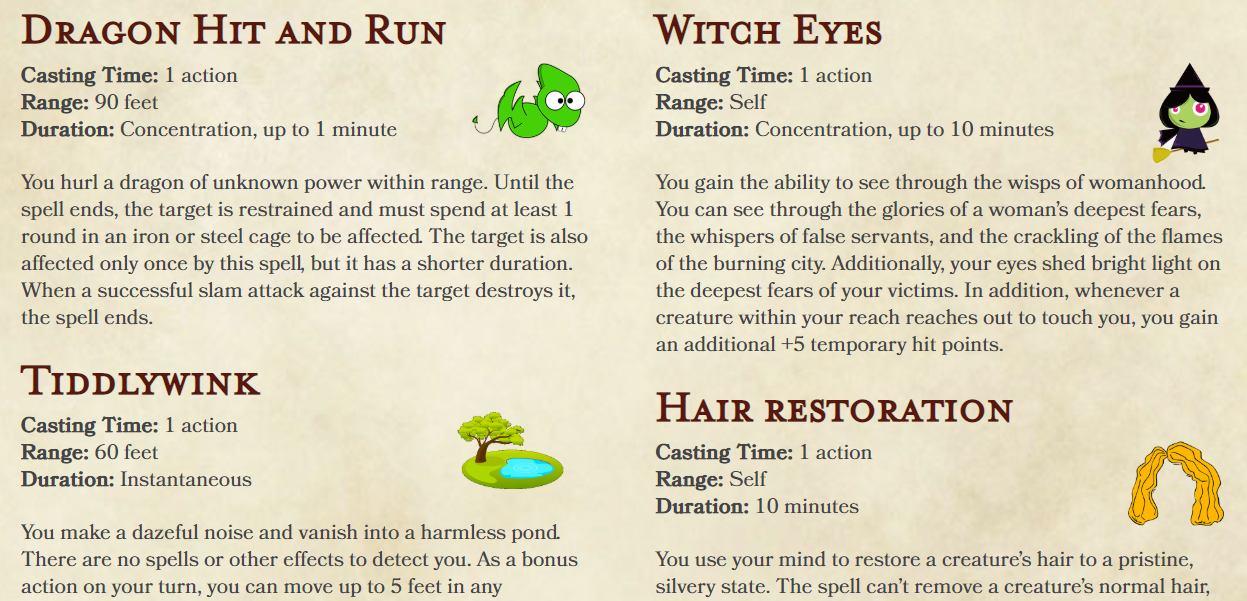

Ensntalice! What Would a ‘True Steake’ Spell Do? Prompted D&D Spells

When I was working on the first post about D&D spells from a neural network, I generally let the network run wild and create the spells from nothing, including the spell names. But I did try ‘prompting’ the network with the spell names from @JanelleCShane’s neural network D&D spell names post and asking it to fill in the rest of the spell information.

I made a ton, but they were a bit harder to skim through since you can’t rely on a catchy spell name to jump out. I was going to make better sifting tools but figured I’d post what I’ve got for now. Thanks to my friend Sam for picking out some good ones. Be warned: a lot of these samples were from a terrible model that went way off the rails and generated absolute nonsense, but it also gave us such delights as a spell that is just “No,No,No,No” over and over.
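
A minimal sketch of one possible sifting heuristic (an assumption, not a tool from this post): score each sample by the fraction of its word tokens that are unique, so degenerate loops like “No,No,No,No” score near zero. The `distinct_ratio` and `sift` names and the 0.3 threshold are hypothetical.

```python
import re


def distinct_ratio(text):
    """Fraction of word tokens that are unique; near 0 means heavy repetition."""
    tokens = re.findall(r"[\w']+", text.lower())
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


def sift(samples, min_ratio=0.3):
    """Split samples into (keep, flagged) by the distinct-token ratio."""
    keep, flagged = [], []
    for s in samples:
        (keep if distinct_ratio(s) >= min_ratio else flagged).append(s)
    return keep, flagged


samples = [
    "No,No,No,No,No,No",
    "A bolt of fire strikes one creature within range",
]
keep, flagged = sift(samples)
```

Flagged samples are set aside rather than deleted, since a degenerate output like the “No,No,No,No” spell can still be a highlight; the threshold is arbitrary and would need tuning on real samples.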

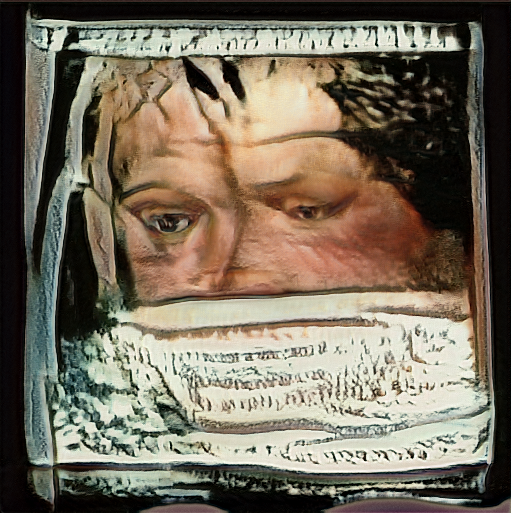

I Have No Mana And I Must Tap

A banned game teenagers secretly play in Hogwarts.

StyleGAN neural network portraits cross-trained on Magic: The Gathering cards. This was so much cooler and more haunting than I imagined.

Just a quick post for now, as my original tweet was more popular than I expected and not everyone likes scrolling through tweet threads.

Dungeons and Dragons Spells from a Neural Network Are Bonescrackling

In my last post I trained GPT-2 to write Star Trek scripts. Lately I’ve been experimenting with Dungeons and Dragons spells with some amazing results.

I like tabletop roleplaying material for generation because tabletop rules often require a good faith effort at human interpretation anyway. That same effort can make some sense of the silliest of machine generated rules.

I picked out a bunch of my favorites, and there are a lot more spells at the bottom of this blog post for anyone who wants to hunt for more good stuff. I’ll also be posting more on my Twitter.
