Update: I haven’t updated this site since 2019 and I should probably take it down…

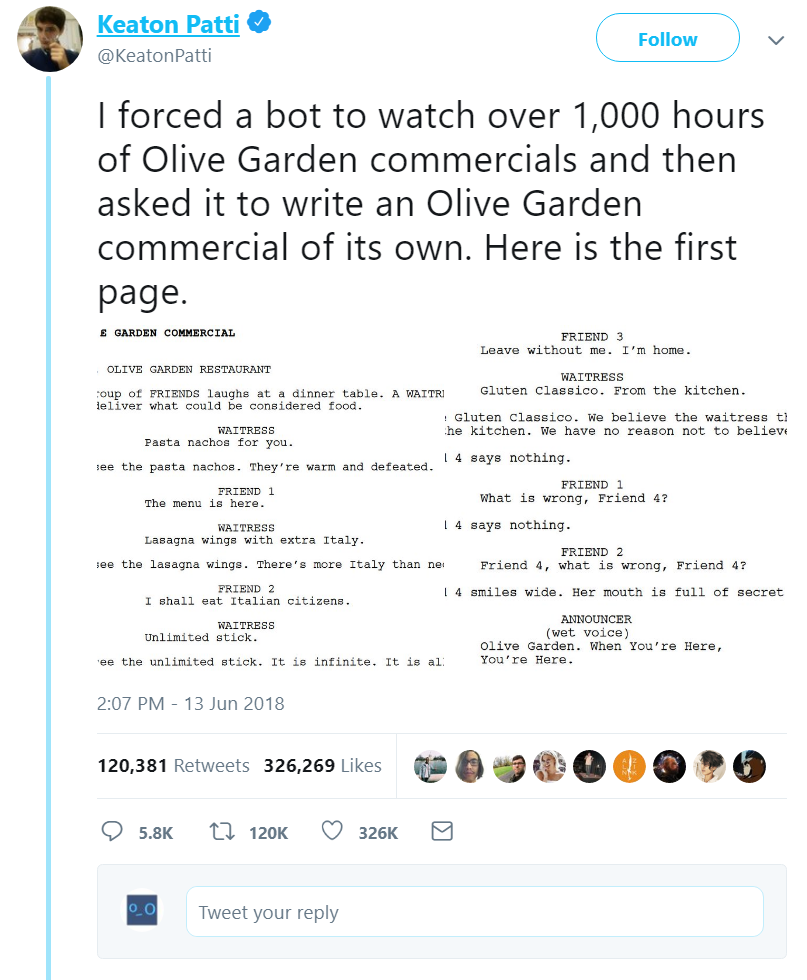

You may have seen “I forced a bot to watch” posts where someone claims to have “forced a bot” to read or watch hours of video and it wrote its own hilarious version like:

Those were funny, but fake.

But it’s possible to do this for real! One of the most foundational posts in this area was Andrej Karpathy’s The Unreasonable Effectiveness of Recurrent Neural Networks, on how a relatively simple neural network can generate Shakespeare, Wikipedia articles, scientific papers, or baby names. Janelle Shane has been writing up funny examples for years.

But the latest progress in this space is stunning. It used to be impressive if the generated text held a single idea for a few sentences in a row. But OpenAI’s GPT-2 remains coherent for multiple pages. Even though all it was trained to do is predict the next word in a sequence, it can do a lot of other tasks like summarizing or translating if you can frame these tasks in terms of text prediction.
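As a rough sketch of what “framing a task as text prediction” can look like: the snippet below only builds the prompt text and doesn’t call any model. The `TL;DR:` cue and the `english = french` layout follow the prompt formats described in OpenAI’s GPT-2 paper; the function names are made up for illustration.

```python
def frame_as_summary(article: str) -> str:
    """Append a 'TL;DR:' cue so the most natural continuation is a summary."""
    return article.strip() + "\nTL;DR:"


def frame_as_translation(examples, sentence: str) -> str:
    """List 'english = french' pairs, then leave the final pair unfinished.

    `examples` is a list of (english, french) tuples. A next-word predictor
    that continues the text is nudged toward writing the missing French half.
    """
    lines = [f"{en} = {fr}" for en, fr in examples]
    lines.append(f"{sentence} =")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical inputs, only here to show the resulting prompt text.
    print(frame_as_summary("A long article about neural networks."))
    print(frame_as_translation([("cat", "chat"), ("dog", "chien")], "bird"))
```

Whatever the model writes after the prompt is the “answer.” Nothing about the model changes between tasks, only the text it’s asked to continue.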

OpenAI isn’t releasing the full version of their model to the public due to concerns about malicious applications of the technology. But they did release a ‘much smaller’ model and it’s still shockingly impressive!

Examples on this site were generated using nshepperd’s finetuning fork: https://github.com/nshepperd/gpt-2.

Update: I need to update this. I’m doing a lot more than GPT-2 and text models now, and that’s no longer the most up-to-date fine-tuning fork. Try https://github.com/mkturkcan/GPTune; there are other options in the works.

Find Me:

twitter.com/jonathanfly

youtube.com

https://www.tiktok.com/@jonathanflyfly

Also at these, which I don’t really use, but maybe someday:

iforcedabot.tumblr.com

instagram.com/forcedabot

facebook.com/iforcedabot