Projecting an image into a GAN means searching that model's latent space for the representation that best reproduces the image. If you look hard enough you can find close matches for almost any image, even ones that bear little resemblance to what the model normally generates, but my favorite results come from searching just a little bit. That feels a lot more like asking the model, “What would Bill Hader look like as a cat?”

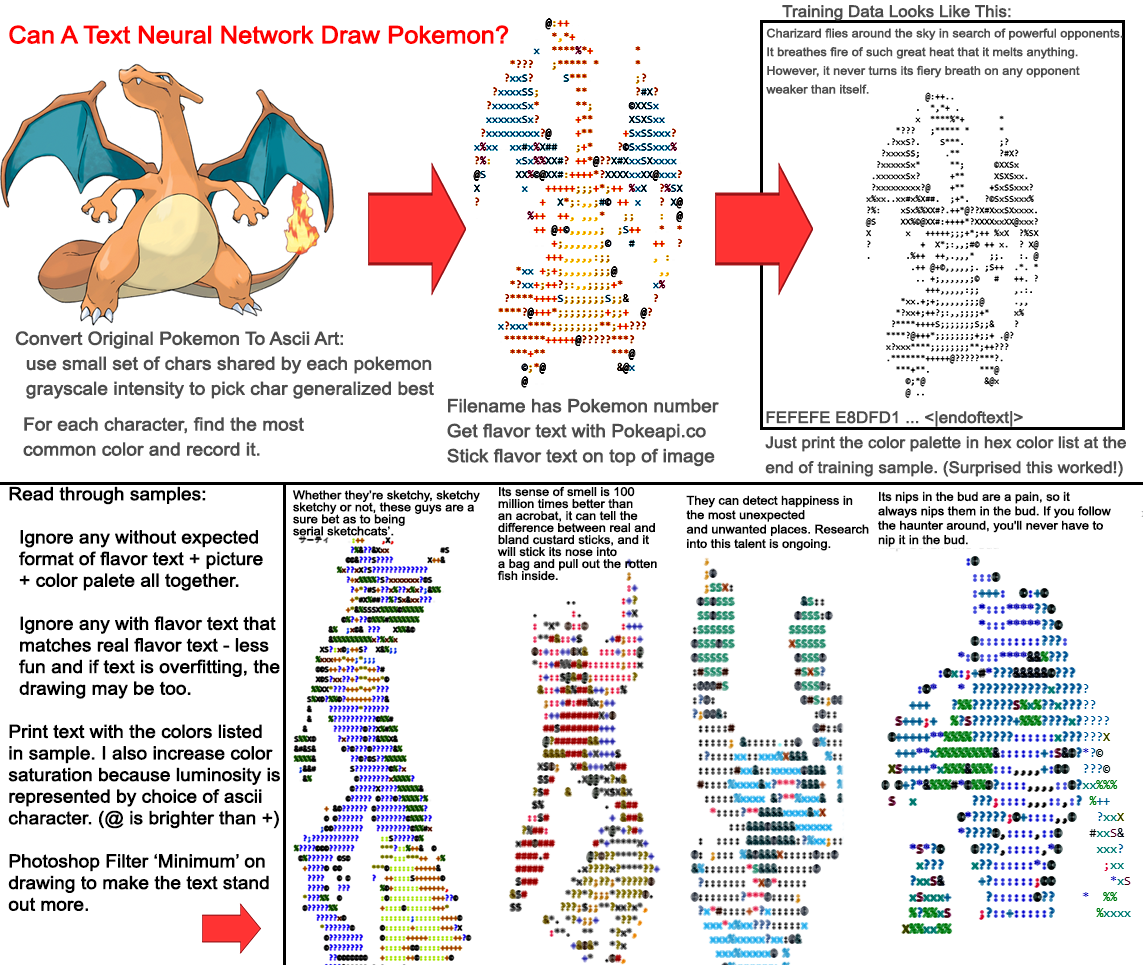
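To make the "search a little versus search a lot" idea concrete, here is a minimal sketch in plain Python. It is not a real GAN and not the code behind the image above: the generator is a toy linear map, and every name in it (`make_generator`, `project`, `pixel_loss`, `latent_norm`) is made up for illustration. The only thing it shares with real projection is the shape of the loop: start from a neutral latent, then nudge it by gradient descent so the generated output moves toward the target.

```python
import random


def make_generator(latent_dim, image_dim, seed=0):
    """Toy stand-in for a GAN generator: a fixed random linear map (an assumption)."""
    rng = random.Random(seed)
    scale = latent_dim ** -0.5
    return [[rng.gauss(0, 1) * scale for _ in range(latent_dim)]
            for _ in range(image_dim)]


def generate(weights, z):
    """Map a latent vector z to a flat list of 'pixels'."""
    return [sum(w * zj for w, zj in zip(row, z)) for row in weights]


def pixel_loss(weights, z, target):
    """Mean squared error between the generated image and the target."""
    image = generate(weights, z)
    return sum((p - t) ** 2 for p, t in zip(image, target)) / len(target)


def project(weights, target, steps, lr=0.1):
    """Search for a latent by gradient descent, starting from the all-zero latent."""
    n, latent_dim = len(target), len(weights[0])
    z = [0.0] * latent_dim
    for _ in range(steps):
        residual = [p - t for p, t in zip(generate(weights, z), target)]
        # Gradient of the mean squared error with respect to each latent coordinate.
        grad = [2 * sum(weights[i][j] * residual[i] for i in range(n)) / n
                for j in range(latent_dim)]
        z = [zj - lr * g for zj, g in zip(z, grad)]
    return z


def latent_norm(z):
    """Distance of the latent from the starting point (the origin)."""
    return sum(v * v for v in z) ** 0.5


if __name__ == "__main__":
    weights = make_generator(latent_dim=4, image_dim=16)
    rng = random.Random(1)
    # A random target: an "image" with no particular resemblance to the model.
    target = [rng.gauss(0, 1) for _ in range(16)]
    for steps in (0, 5, 500):
        z = project(weights, target, steps)
        print(steps, "steps: loss", round(pixel_loss(weights, z, target), 4),
              "latent distance", round(latent_norm(z), 4))
```

In this toy setup, a handful of steps leaves the latent close to where it started, so the output is still mostly the model's own idea of an image with a hint of the target. Many steps drag the latent as far as it needs to go to match the target as well as the model can. A real projector would swap in the actual generator, a perceptual loss, and an autodiff library, but the trade-off is the same.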

How To Use The Most Advanced Text/Language Model Neural Network In The World To Draw Pokemon.

Placeholder for a later post.

For now just linking some tweets.