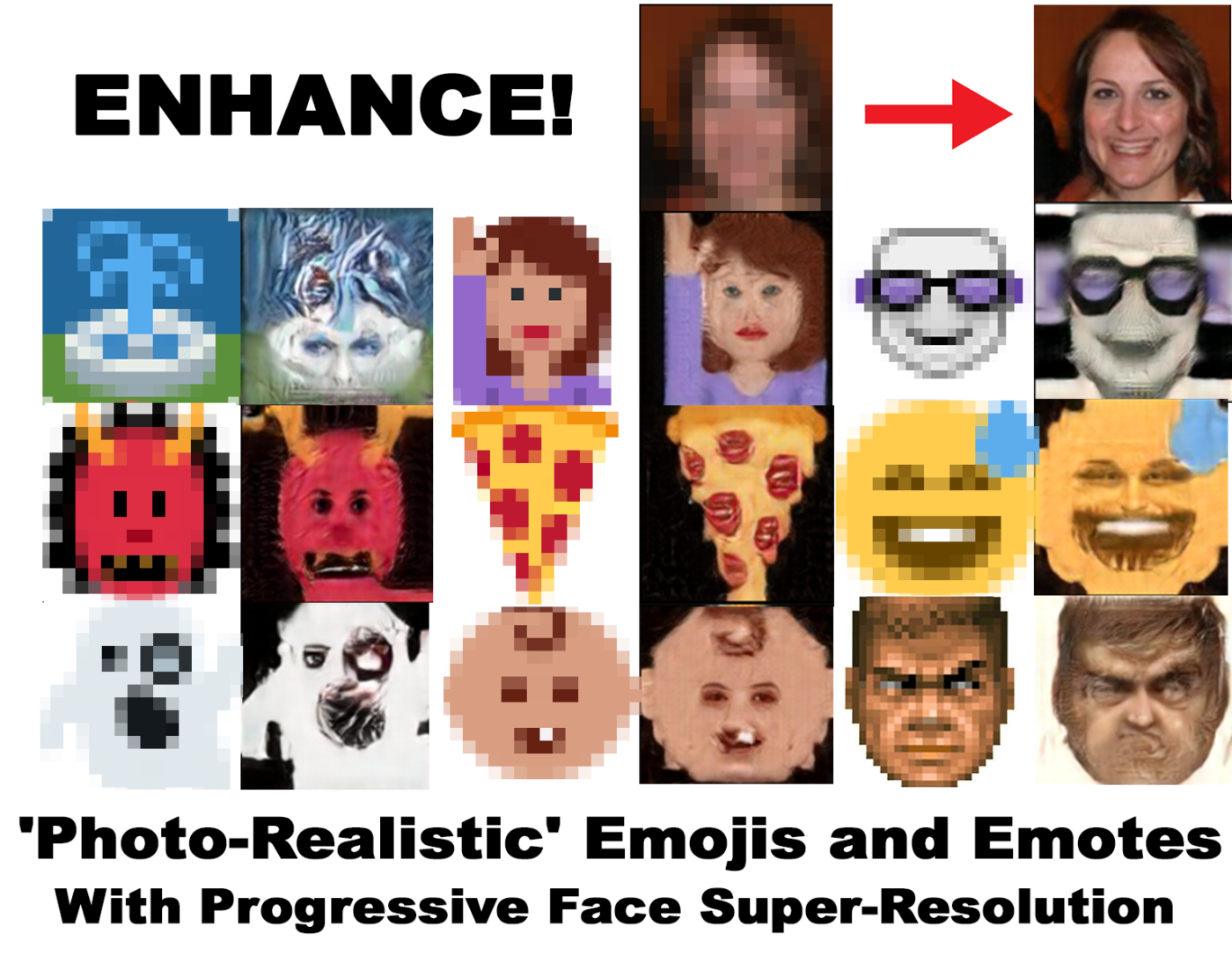

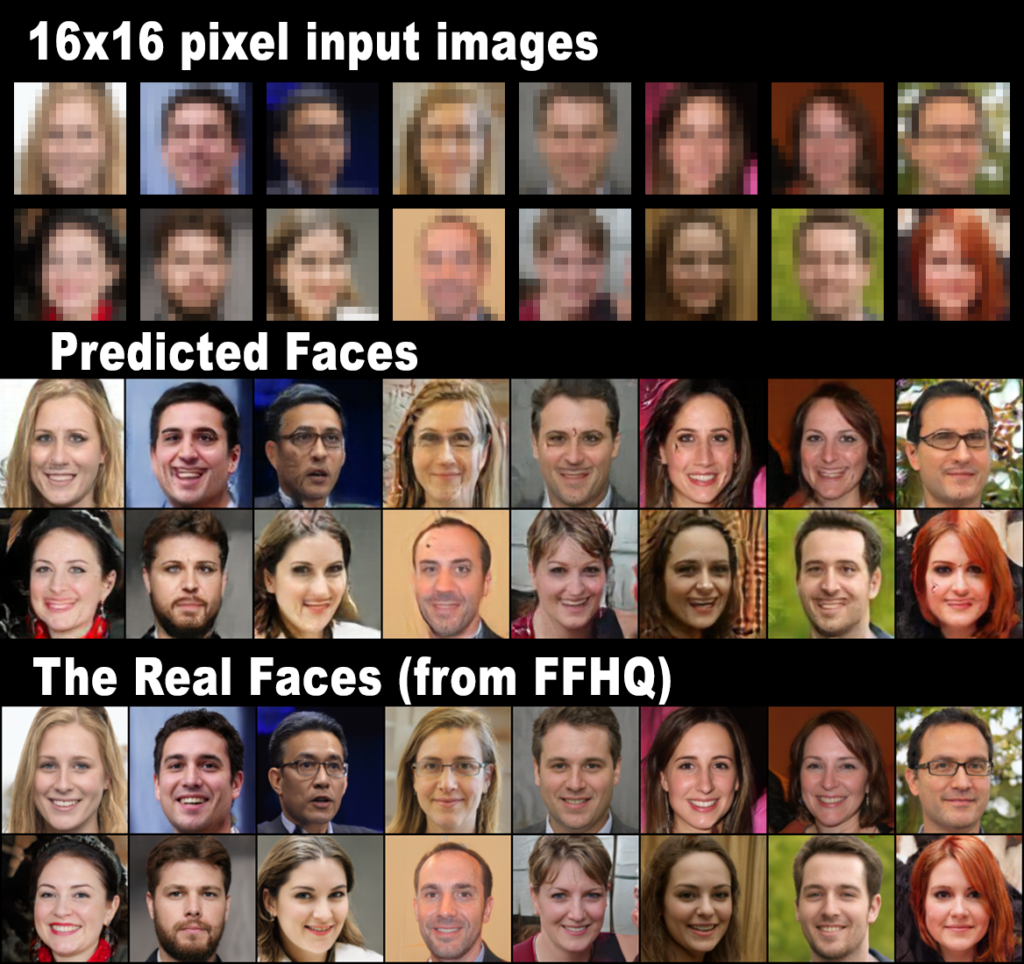

Progressive Face Super-Resolution via Attention to Facial Landmark (arxiv.org) is a machine learning model trained to reconstruct face images from tiny 16×16 pixel inputs, scaling them up to 128×128 with nearly photo-realistic results. Here’s an example:

A detail not mentioned in the official paper: a pretty high percentage of Harry-Potter-style scars… 🤨

This is a best-case scenario for this model. While I didn’t cherry-pick these samples, the faces are cropped to the right size and roughly aligned, and they were resized to 16×16 pixel input images with the exact same code that was used to train and test the model. This is what this model was built for.
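
To get a feel for how little information a 16×16 input carries, here is a minimal sketch of shrinking a 128×128 image by averaging 8×8 blocks. It is not the model's own preprocessing code, and the resampling method that code uses is an open assumption here. The `box_downscale` helper and the gradient test image are made up purely for illustration.

```python
def box_downscale(pixels, factor):
    """Downscale a square grayscale image (a list of rows) by averaging
    non-overlapping factor x factor blocks.

    Illustrative only: the model's real pipeline may use a different filter.
    """
    size = len(pixels)
    if size % factor != 0 or any(len(row) != size for row in pixels):
        raise ValueError("expected a square image whose side is divisible by factor")

    out_size = size // factor
    out = []
    for by in range(out_size):
        row = []
        for bx in range(out_size):
            # Collect every pixel in the current block and average it.
            block = [
                pixels[by * factor + dy][bx * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Hypothetical 128x128 grayscale image: a horizontal gradient, not a real face.
high_res = [[x * 2 for x in range(128)] for _ in range(128)]

# 128 / 16 = 8, so each output pixel summarizes an 8x8 block (64 input pixels).
low_res = box_downscale(high_res, factor=8)
print(len(low_res), len(low_res[0]))
```

Each of the 256 output pixels stands in for 64 input pixels, so the model has to fill in roughly 98% of the final image. A different downscaling filter produces slightly different 16×16 inputs, which is why using the exact same resize code as training matters.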